Manual testing vs automation testing – The dilemma

The crux of the problem in deciding between manual testing and automation testing is that many senior managers want to automate 100 percent of testing and fixate on the automation percentage. Who hasn’t run into this? Yet we know that not everything can or should be automated. During this session, we will discuss the challenges of creating well-formed test cases and the criteria for identifying suitable candidates for automation scripts.

Remember: this is part of our 7-episode series on Agile in QA

By the way, this is the third episode of our 7-part series on Agile from a software quality point of view. Make sure you check out all the episodes in sequential order to build a powerful QA framework in your business.

👉 Agile Requirements Gathering

👉 Agile Sizing (Epic / Story / Task)

👉 Testing Manual vs. Automation

👉 Test Asset Management

👉 Automation Scriptwriting

👉 What Is a Quality Gate and How to Use It

👉 KPIs

In our first episode, we talked about requirements and their importance in developing quality software. Then, in our second episode, we dealt with the challenges of sizing Agile Epics, Stories, Tasks, and Spikes. Make sure to check out those posts as well.

First, let’s establish a common understanding of testing terms

#Manual Testing

A set of instructions on how to test an application, with expected results, that a team member follows and then reports on in a prescribed manner. The team uses a specified process to report the test results and associated defects, and commonly tracks the test cases (assets) and their execution status in a dedicated tool or a spreadsheet.

#Automation Testing

A scripted set of instructions that exercises an application. The term can apply to something as simple as an individual test script or as complex as a block of test cases executed by a continuous deployment pipeline that decides whether or not the build can be pushed to the next level.
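To make the "complex" end of that definition concrete, here is a minimal sketch, assuming a pipeline step that runs a block of automated checks and lets the build move to the next level only if every check passes. The function under test (`cart_total`), the check names, and the all-must-pass gate rule are hypothetical illustrations, not a prescribed gate policy.

```python
from typing import Callable, Dict, List

# Hypothetical application function under test.
def cart_total(prices: List[float]) -> float:
    return round(sum(prices), 2)

# Each automated check returns True when its verification point holds.
CHECKS: Dict[str, Callable[[], bool]] = {
    "empty_cart_is_zero": lambda: cart_total([]) == 0,
    "two_items_sum_correctly": lambda: cart_total([19.99, 5.01]) == 25.00,
}

def run_block(checks: Dict[str, Callable[[], bool]]) -> Dict[str, bool]:
    """Execute every check in the block and record pass (True) or fail (False)."""
    return {name: check() for name, check in checks.items()}

def can_promote(results: Dict[str, bool]) -> bool:
    """Assumed gate rule: promote only if the block ran and every check passed."""
    return bool(results) and all(results.values())

if __name__ == "__main__":
    results = run_block(CHECKS)
    for name, passed in results.items():
        print(f"{name}: {'PASS' if passed else 'FAIL'}")
    print("Push build to next level:", can_promote(results))
```

Treating an empty block as a failure is a deliberate choice here: a gate that passes when nothing ran gives false assurance.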

#Exploratory Testing

This is generally free-form and a good way to involve non-traditional testers in the test process. In practice, it is best to time-box the effort and provide a central place to report observations and bugs (defects, identified deficiencies, or incidents). In an Agile team, this can be paired with the retrospective ceremony. Exploratory testing is a form of manual testing.

#Unit Testing

Targets individual segments (units) of the software. It generally exercises the code to validate the expected positive and error conditions within it. Unit testing can be either manual or automated, depending on the team.
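As an illustration of an automated unit test covering both positive and error conditions, here is a small sketch using Python's standard `unittest` module. The `parse_quantity` function and its rules are hypothetical, chosen only to give the tests something to exercise.

```python
import unittest

# Hypothetical unit under test: parse a quantity entered by a user.
def parse_quantity(text: str) -> int:
    value = int(text)  # raises ValueError for non-numeric input
    if value < 1:
        raise ValueError("quantity must be at least 1")
    return value

class ParseQuantityTest(unittest.TestCase):
    def test_valid_quantity(self):
        # Positive condition: well-formed input returns the number.
        self.assertEqual(parse_quantity("3"), 3)

    def test_non_numeric_rejected(self):
        # Error condition: non-numeric input is rejected.
        with self.assertRaises(ValueError):
            parse_quantity("three")

    def test_zero_rejected(self):
        # Error condition: out-of-range input is rejected.
        with self.assertRaises(ValueError):
            parse_quantity("0")

if __name__ == "__main__":
    unittest.main()
```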

What are the elements of a well-formed test case?

We can think about creating a test case in much the same way we write an agile story, using the Given, When, Then format.

☑︎Assertion

Tests code or functionality for expected values and flags when a check comes back negative or false. For example, the test may assert that the value of an expression should be 45; if we get anything other than 45, the assertion returns false.
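The 45 example can be sketched as a small helper, assuming the test should record a true/false result rather than stop at the first failure. `assert_equal` is a hypothetical name for illustration, not a specific tool's API.

```python
def assert_equal(actual, expected) -> bool:
    """Return True if actual matches expected; otherwise report the mismatch and return False."""
    if actual == expected:
        return True
    print(f"Assertion failed: expected {expected!r}, got {actual!r}")
    return False

# The expression under test should evaluate to 45.
print(assert_equal(9 * 5, 45))  # True
print(assert_equal(9 * 4, 45))  # prints the failure message, then False
```

Python's built-in `assert` statement behaves differently: it raises `AssertionError` on failure instead of returning false, which is what most test frameworks rely on.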

☑︎Verification Points

Each test case should have at least one verification point, and it can have more than one. Using the agile mindset, the goal is a test case complex enough to cover a self-contained objective, but simple enough that its result does not depend on so many verification points that it fails frequently.

☑︎Test Results

Reporting test findings and test status. Generally, a test case’s status is one of not started, in progress, passed (no defects), failed (defect found), or blocked (test cannot be executed due to a dependency). When a test passes, there is generally little documentation, because the expected and actual test results agree. When a test case is blocked or fails, notes need to be added to document how the actual results vary from the expected results.
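The status values and the "notes on failure or block" rule above could be modeled roughly as follows. This is a sketch under assumptions: `Status` and `ResultRecord` are hypothetical names, and real test-management tools define their own status fields.

```python
from dataclasses import dataclass
from enum import Enum

class Status(Enum):
    NOT_STARTED = "not started"
    IN_PROGRESS = "in progress"
    PASSED = "passed"    # no defects
    FAILED = "failed"    # defect found
    BLOCKED = "blocked"  # cannot run because of a dependency

@dataclass
class ResultRecord:
    test_id: str
    status: Status = Status.NOT_STARTED
    notes: str = ""

    def record(self, status: Status, notes: str = "") -> None:
        # Failed and blocked results must explain how the actual results differ
        # from the expected results (or what the test is blocked on).
        if status in (Status.FAILED, Status.BLOCKED) and not notes.strip():
            raise ValueError(f"{self.test_id}: notes are required for a {status.value} result")
        self.status = status
        self.notes = notes

# Usage: a pass needs no notes; a failure must say what went wrong.
login = ResultRecord("TC-001")
login.record(Status.PASSED)
search = ResultRecord("TC-002")
search.record(Status.FAILED, "Search returned no results for an existing product")
```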

☑︎Defect*

A defect, also known as a bug, occurs when the product does not perform as expected. Defects found in production are often called incidents.

*Note: On an Agile team, testing tasks are built into stories, and defects may be identified and resolved outside of a formal defect tracking process as part of the story completion process.

Before the next part of our discussion, we need to pause for a slightly theoretical conversation. An easy way to start is by asking what makes a test case unfit for automation:

- Single-use test cases

- Edge-case test cases

- Test cases with many dependencies

Automation test case development is generally reserved for repeatable, well-understood tests that will be executed multiple times.
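The screening questions above can be expressed as a simple filter. This is a minimal sketch: the `Candidate` fields and the dependency threshold (`MAX_DEPENDENCIES = 3`) are hypothetical and would need tuning to your own team and application.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    expected_runs: int     # how many times the test will be executed
    is_edge_case: bool
    dependency_count: int  # external systems, data setups, and so on
    well_understood: bool

MAX_DEPENDENCIES = 3  # assumed threshold, not an industry standard

def fit_for_automation(c: Candidate) -> bool:
    """Screen out the unfit cases; keep repeatable, well-understood tests."""
    if c.expected_runs <= 1:                    # single-use test case
        return False
    if c.is_edge_case:                          # edge-case test case
        return False
    if c.dependency_count > MAX_DEPENDENCIES:   # too many dependencies
        return False
    return c.well_understood
```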

When the point of the test case is to test the validity of data, the test case may be a data-driven framework that iterates through the data row by row. In this case, the test designer decides whether the test results will be reported as one test case per row or as a single result for the complete data set.
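Here is a sketch of that data-driven pattern, showing both reporting choices side by side. The validity rule in `row_is_valid` and the field names `id` and `amount` are hypothetical placeholders for whatever your data actually requires.

```python
from typing import Callable, Dict, List

Row = Dict[str, str]

# Hypothetical validity rule: a row needs a non-empty id and a non-negative numeric amount.
def row_is_valid(row: Row) -> bool:
    amount = row.get("amount", "")
    return bool(row.get("id")) and amount.replace(".", "", 1).isdigit()

def run_data_driven(rows: List[Row], check: Callable[[Row], bool],
                    per_row: bool) -> Dict[str, bool]:
    results = [check(row) for row in rows]
    if per_row:
        # One reported test case per data row.
        return {f"row {i + 1}": ok for i, ok in enumerate(results)}
    # One reported result for the complete data set: every row must pass.
    return {"data set": bool(results) and all(results)}

rows = [
    {"id": "A1", "amount": "12.50"},
    {"id": "", "amount": "3"},
    {"id": "A3", "amount": "abc"},
]
print(run_data_driven(rows, row_is_valid, per_row=True))
print(run_data_driven(rows, row_is_valid, per_row=False))
```

Per-row reporting pinpoints exactly which records are bad; whole-set reporting keeps the test count stable no matter how large the data set grows.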

How tests are grouped, common terms, and test execution strategies

#Test Case

A test (manual or automated) with an individual objective and at least one verification point.

#Test Script

An automated test with an individual objective and at least one verification point.

#Test Set

A group of tests, generally executed together, targeting specific functionality and reported on as a test set.

#Functional Testing

Usually manual or exploratory testing. Manual functional test cases generally contain the following parts:

- Objective – why the test case is important. This can also be referred to as an assertion.

- Detailed Instructions – how to complete the test, including the specified verification points.

- Expected Results – the correct state of the verification points after the test has been completed.

- Actual Results – after the test has been completed, documentation of the actual results. If a verification point does not end in the expected state, record the details of its actual end state. If a defect is written up, include the defect tracking information.
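Those four parts map naturally onto a small record. The sketch below is one possible shape, assuming each step carries its own expected and actual state; the class and field names are hypothetical, not a schema from any particular test-management tool.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Step:
    instruction: str              # what the tester does
    expected: str                 # expected state of the verification point
    actual: Optional[str] = None  # filled in by the tester after execution

@dataclass
class FunctionalTestCase:
    objective: str
    steps: List[Step] = field(default_factory=list)
    defect_id: Optional[str] = None  # tracking reference if a defect is written up

    def deviations(self) -> List[Step]:
        """Executed steps whose actual end state differs from the expected state."""
        return [s for s in self.steps if s.actual is not None and s.actual != s.expected]
```

In practice testers rarely type the expected text verbatim, so a real tool would usually store a pass/fail flag per step alongside the free-text actual result; exact string comparison keeps this sketch short.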

#Illustration

An example test case for a login scenario for a web page may contain the following information:

Objective: Log in to the XYZ application using a predetermined username and password to access the application’s Homepage.

Detailed Instructions: (Note: The instructions may be broken into steps, with a verification point on each step)

- Open a browser window with the URL WWW.Sample.COM/Login

- Enter User Name: Demo1

- Enter Password: Password1

- Press the Login button

Verification Points: The login page loads with username and password input fields and Login and Cancel buttons. The login page accepts the username and password and loads the Homepage when the Login button is pressed.

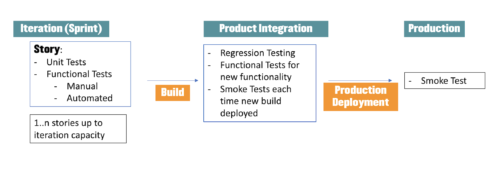
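The same login test could be automated. A real script would drive a browser (for example with Selenium WebDriver). To keep this sketch self-contained and runnable, it instead uses a hypothetical in-memory `FakeLoginApp` as a stand-in for the XYZ application. Each step is followed by its own verification point, as the note on the instructions suggests.

```python
class FakeLoginApp:
    """Hypothetical in-memory stand-in for the XYZ application's login flow."""
    VALID_USERS = {"Demo1": "Password1"}

    def __init__(self) -> None:
        self.page = None
        self.fields: dict = {}
        self.buttons: set = set()

    def open(self, url: str) -> None:
        if url.lower().endswith("/login"):
            self.page = "Login"
            self.fields = {"username": "", "password": ""}
            self.buttons = {"Login", "Cancel"}

    def enter(self, field_name: str, value: str) -> None:
        self.fields[field_name] = value

    def press(self, button: str) -> None:
        if button == "Login":
            user, pwd = self.fields["username"], self.fields["password"]
            self.page = "Homepage" if self.VALID_USERS.get(user) == pwd else "Login"

def run_login_test(app: FakeLoginApp, username: str, password: str) -> bool:
    # Step 1: open the login page.
    app.open("WWW.Sample.COM/Login")
    # Verification point 1: input fields and Login/Cancel buttons are present.
    controls_ok = (
        app.page == "Login"
        and set(app.fields) == {"username", "password"}
        and {"Login", "Cancel"} <= app.buttons
    )
    if not controls_ok:
        return False
    # Steps 2-4: enter the credentials and press Login.
    app.enter("username", username)
    app.enter("password", password)
    app.press("Login")
    # Verification point 2: the Homepage loads.
    return app.page == "Homepage"
```

Keeping the test steps in `run_login_test` separate from the application driver means the same test could later be pointed at a real browser driver without rewriting its steps.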

Now that we understand some basic terms and the general format of a test case, let’s talk about test execution strategies. If the team is following a test-driven development (TDD) approach, unit tests will be written and executed against the code while it is being created. Once the unit tests pass, the code should be ready for integration.

Test execution strategy

#Functional tests

Are executed against the delivered code. For many teams, the first round of testing against a code base is manual while they gain experience, knowledge, and expertise. Once functional testing on the delivered code is well understood, teams generally identify candidates for automation.

#Full Regression tests

Exercise the functionality of the full application and may combine manual and automated testing. The advantage is that the team has assurance the full application is working as expected; the disadvantage is the length of time it takes to run the tests.

#Targeted Regression tests

Target new functionality plus a baseline level of application functionality. The goal is to shorten the test cycle, especially if the application has a large library of tests.
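One way to select a targeted regression run is to tag each test with the features it covers and pick the tests that touch changed features, plus a baseline set. This sketch assumes a hypothetical tagging convention where baseline tests carry a `core` tag; the test names are illustrative only.

```python
from typing import Dict, List, Set

# Hypothetical test library: each test is tagged with the features it covers.
TEST_LIBRARY: Dict[str, Set[str]] = {
    "login_valid": {"login", "core"},
    "login_lockout": {"login"},
    "search_basic": {"search", "core"},
    "search_filters": {"search"},
    "checkout_coupon": {"checkout"},
    "checkout_happy": {"checkout", "core"},
}

def targeted_regression(changed: Set[str], library: Dict[str, Set[str]],
                        baseline_tag: str = "core") -> List[str]:
    """Select tests covering changed features, plus the baseline tests."""
    return sorted(
        name for name, tags in library.items()
        if tags & changed or baseline_tag in tags
    )

# A change to checkout runs 4 of the 6 tests instead of the full regression.
print(targeted_regression({"checkout"}, TEST_LIBRARY))
```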

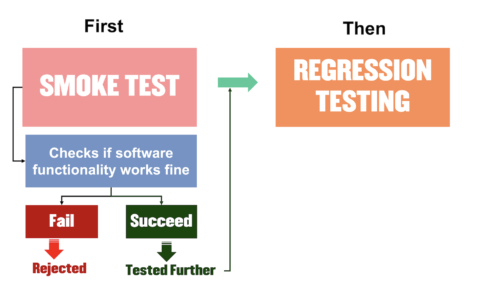

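#Smoke Test

A quick, shallow set of tests covering the most critical functionality, run to confirm that a build is stable enough to proceed with further testing.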

So, when should we use manual testing vs automation testing?

The question we set out to answer at the beginning of this session is when to use manual testing vs automation testing. Based on our discussion, we use automation testing where the effort and cost of creating the automation are justified because it targets critical functionality. We also use automation testing for tests that will be executed frequently and for tests that require the least effort to automate and maintain.
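The "executed frequently" and "least effort to automate and maintain" criteria can be combined into a rough break-even estimate. This is a minimal sketch under an assumed, simplified cost model (hypothetical, not an XBOSoft formula): manual cost is runs × manual hours per run, and automated cost is build hours + runs × automated hours per run, where the per-run figure covers reviewing results plus maintenance.

```python
import math

def break_even_runs(build_hours: float, manual_hours_per_run: float,
                    automated_hours_per_run: float) -> float:
    """Smallest number of executions at which automating costs no more than manual testing.

    Assumed cost model:
      manual cost    = runs * manual_hours_per_run
      automated cost = build_hours + runs * automated_hours_per_run
    """
    saving_per_run = manual_hours_per_run - automated_hours_per_run
    if saving_per_run <= 0:
        return math.inf  # automation never pays back under this model
    return math.ceil(build_hours / saving_per_run)

# 16 hours to build, 2 hours per manual run, 0.5 hours per automated run:
# the script pays for itself after 11 executions.
print(break_even_runs(16, 2, 0.5))
```

A test that will run only a handful of times, or one whose maintenance cost approaches its manual cost, never reaches break-even, which is the arithmetic behind the single-use and high-maintenance exclusions.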

Universal rules

Whether you’re automating unit, functional, regression, or smoke tests, these rules apply:

- Targeting frequently used tests provides the highest ‘bang for the buck’ for your automation efforts.

- Automation tests, like the underlying code, have to be maintained, have valid data inputs, and be modified when the underlying functionality changes. Therefore, you’ll also want to automate the parts of the software that are less likely to change, so you can avoid going back and modifying your automation scripts. Of course, you can also make your automation scripts more adaptable by creating reusable objects or using parameters, so that changes to functions or data can be accommodated quickly, with minimal impact on the existing automation code and minimal maintenance.
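The "reusable objects or parameters" advice can be sketched with a page-object-style class. Here locators are defined once and test data is passed in as parameters, so a renamed field or a new data set changes one place rather than every script. The locator strings, the second credential pair, and the `RecordingDriver` stand-in for a browser driver are all hypothetical.

```python
from typing import List, Tuple

class LoginPage:
    """Reusable page object: locators are defined once (hypothetical IDs)."""
    LOCATORS = {"username": "#user-name", "password": "#password", "submit": "#login"}

    def __init__(self, driver) -> None:
        self.driver = driver

    def login(self, username: str, password: str) -> None:
        self.driver.fill(self.LOCATORS["username"], username)
        self.driver.fill(self.LOCATORS["password"], password)
        self.driver.click(self.LOCATORS["submit"])

class RecordingDriver:
    """Hypothetical stand-in for a browser driver that records the actions it receives."""
    def __init__(self) -> None:
        self.actions: List[Tuple[str, ...]] = []

    def fill(self, locator: str, value: str) -> None:
        self.actions.append(("fill", locator, value))

    def click(self, locator: str) -> None:
        self.actions.append(("click", locator))

# Parameterized data: adding a case means adding a row, not writing a new script.
LOGIN_CASES = [("Demo1", "Password1"), ("Demo2", "Password2")]

for user, pwd in LOGIN_CASES:
    driver = RecordingDriver()
    LoginPage(driver).login(user, pwd)
    print(driver.actions)
```

If the login button's ID changes, only `LoginPage.LOCATORS` needs editing; every test that logs in picks up the change.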

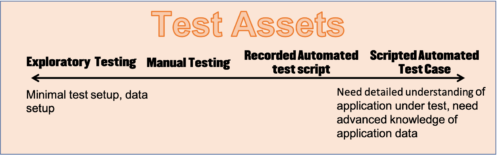

Dispelling the manual testing vs automation testing mentality

As usual, the many factors in deciding whether automation is a viable solution have to be balanced with common sense. The graphic above illustrates this best. At the end of the day, it’s easy to see that this does not result in a 100% automation test strategy, as there will always be some manual testing.

Detailed exploration of the topic

Of course, there are many tools, some based on AI or machine learning, that boast automatic object recognition and self-learning. But in the end, you still have to understand the application and the domain deeply and be able to think like an end user in order to develop meaningful test automation scripts. At XBOSoft, we firmly believe that “You can’t automate what you don’t understand”. We could be wrong, but over the last 15 years, we’ve built a strong case for both manual testing and test automation based on understanding the domain and application. As such, our team has deep expertise in:

- Game testing

- Financial & Banking

- Loan application testing

- Retail & Sales (POS)

- Healthcare software

- Technology

- Manufacturing

- IT & Management

So, if you want more than button pushing and clicking for your QA, and adaptable automation scripts that not only cover core functionality but also emulate what a real user would do, come to XBOSoft.


In the next episode

Thank you for taking the time to learn more about manual testing vs automation testing. In the next XBOTalk episode, we will explore Test Asset Management, including:

- Storage of Test Assets

- Organizing Test Assets into reusable test sets

Need Help with Your Automation Testing?


Contact us today to automate your software testing.

